CUDA Support for WSL 2

https://docs.nvidia.com/cuda/wsl-user-guide/index.html#cuda-support-for-wsl2

CUDA on WSL :: CUDA Toolkit Documentation


The latest NVIDIA Windows GPU driver fully supports WSL 2.

With CUDA support in the driver, existing applications (compiled elsewhere on a Linux system for the same target GPU) can run unmodified within the WSL environment.

To compile new CUDA applications, a CUDA Toolkit for Linux x86 is needed.

CUDA Toolkit support for WSL is still in the preview stage, as developer tools such as profilers are not yet available.

However, CUDA application development is fully supported in the WSL 2 environment; as a result, users should be able to compile new CUDA Linux applications with the latest CUDA Toolkit for x86 Linux.
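To confirm that the toolkit can build and run a CUDA program inside WSL 2, a small smoke test like the one below may help. It is a sketch: the helper names, the /tmp file names, and the fallback compiler location /usr/local/cuda/bin/nvcc (where deb installs usually provide a symlink) are assumptions, not part of the NVIDIA instructions.

```shell
#!/usr/bin/env bash
# Sketch: build and run a tiny CUDA program to check the toolkit and the WSL 2 driver stub.
# Helper names and file locations are hypothetical.

write_smoke_src() {
  # Quoted 'EOF' stops the shell from expanding anything inside the CUDA source.
  cat > "$1" <<'EOF'
#include <cstdio>

__global__ void add_one(int *x) { x[threadIdx.x] += 1; }

int main() {
  const int n = 4;
  int h[n] = {0, 1, 2, 3};
  int *d = nullptr;
  if (cudaMalloc(&d, n * sizeof(int)) != cudaSuccess) { std::printf("cudaMalloc failed\n"); return 1; }
  cudaMemcpy(d, h, n * sizeof(int), cudaMemcpyHostToDevice);
  add_one<<<1, n>>>(d);
  // Check the launch itself, then the copy back (which waits for the kernel).
  if (cudaGetLastError() != cudaSuccess) { std::printf("launch failed\n"); return 1; }
  if (cudaMemcpy(h, d, n * sizeof(int), cudaMemcpyDeviceToHost) != cudaSuccess) { std::printf("kernel failed\n"); return 1; }
  cudaFree(d);
  for (int i = 0; i < n; ++i) std::printf("%d ", h[i]);
  std::printf("\n");
  return 0;
}
EOF
}

cuda_smoke_test() {
  local nvcc src="/tmp/cuda_smoke.cu" bin="/tmp/cuda_smoke"
  # Prefer nvcc on PATH; otherwise try the usual /usr/local/cuda symlink (assumption).
  nvcc="$(command -v nvcc || echo /usr/local/cuda/bin/nvcc)"
  [ -x "$nvcc" ] || { echo "nvcc not found (is PATH set up?)" >&2; return 2; }
  write_smoke_src "$src"
  "$nvcc" -o "$bin" "$src" && "$bin"
}
```

On a working setup, `cuda_smoke_test` should print `1 2 3 4`; a return code of 2 means nvcc was not found, which usually points to the PATH setup in the post-installation actions.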

Once a Windows NVIDIA GPU driver is installed on the system, CUDA becomes available within WSL 2.

The CUDA driver installed on the Windows host is stubbed inside WSL 2 as libcuda.so; therefore, users must not install any NVIDIA GPU Linux driver within WSL 2.

One has to be very careful here, as the default CUDA Toolkit comes packaged with a driver, and it is easy to overwrite the WSL 2 NVIDIA driver with the default installation.
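A quick way to check whether that has happened is sketched below. It assumes the Windows driver's stub libraries live in /usr/lib/wsl/lib (the usual location on WSL 2, overridable through a `WSL_LIB_DIR` variable) and treats any installed `nvidia-driver-*` or `nvidia-dkms-*` package as a sign that a Linux driver was pulled in; the function name and the package patterns are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: check that CUDA inside WSL 2 still uses the Windows driver stub.
# ASSUMPTION: the stub libraries live in /usr/lib/wsl/lib; override with WSL_LIB_DIR.

wsl_cuda_check() {
  local lib_dir="${WSL_LIB_DIR:-/usr/lib/wsl/lib}" pkgs

  # 1. The libcuda stub provided by the Windows driver
  if [ -e "${lib_dir}/libcuda.so.1" ]; then
    echo "libcuda stub: found in ${lib_dir}"
  else
    echo "libcuda stub: missing from ${lib_dir}"
  fi

  # 2. Where the dynamic linker resolves libcuda (expected: inside lib_dir)
  if command -v ldconfig >/dev/null 2>&1; then
    echo "linker cache:"
    ldconfig -p 2>/dev/null | grep 'libcuda\.so' || echo "  (no libcuda entry)"
  fi

  # 3. Installed ("ii") Linux NVIDIA driver packages; there should be none under WSL 2
  pkgs=$(dpkg-query -W -f='${db:Status-Abbrev} ${binary:Package}\n' \
           'nvidia-driver-*' 'nvidia-dkms-*' 2>/dev/null | awk '$1 == "ii" { print $2 }' || true)
  if [ -n "$pkgs" ]; then
    echo "Linux driver packages: FOUND"
    printf '  %s\n' $pkgs   # unquoted on purpose: one package per line
  else
    echo "Linux driver packages: none"
  fi
}
```

Running `wsl_cuda_check` after any apt operation gives a quick read-out; a `FOUND` line suggests a Linux driver was installed and should be removed, for example with the purge commands in step 0.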

We recommend that developers use a separate CUDA Toolkit for WSL 2 (Ubuntu), available here, to avoid this overwriting.

Installation Guide Linux :: CUDA Toolkit Documentation (docs.nvidia.com)

0. Remove CUDA files

$ sudo apt-get remove --purge '^nvidia-.*'

$ sudo apt-get remove --purge 'cuda*'

$ sudo apt-get autoremove --purge 'cuda*'

$ sudo rm -rf /usr/local/cuda

$ sudo rm -rf /usr/local/cuda-#.#    # replace #.# with the installed version, e.g. 11.7
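Patterns such as `'^nvidia-.*'` and `'cuda*'` can match more packages than expected, so it may be worth previewing the removal first. The sketch below relies on apt-get's simulate flag (`-s`), which prints the planned actions (lines starting with `Purg` or `Remv`) without changing the system; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: preview what the purge in step 0 would remove before running it for real.

# Extract package names from simulate output lines such as "Purg cuda-11-7 [11.7.1-1]".
parse_sim_removals() {
  awk '$1 == "Purg" || $1 == "Remv" { print $2 }'
}

preview_cuda_purge() {
  # Same patterns as the remove commands above; -s makes it a dry run (no root needed).
  apt-get -s remove --purge '^nvidia-.*' 'cuda*' 2>/dev/null | parse_sim_removals
}
```

If `preview_cuda_purge` lists a package that should stay, the patterns can be narrowed before running the real commands.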

1. Prepare WSL

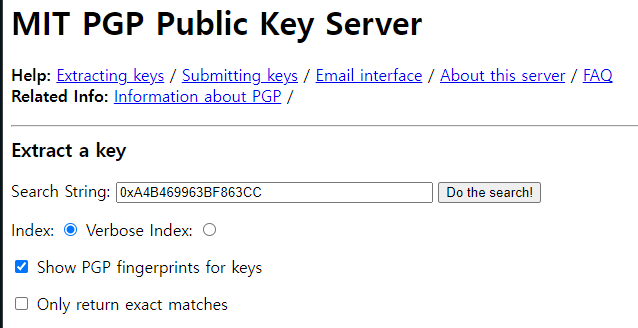

1.1. Remove Outdated Signing key:

$ sudo apt-key del 7fa2af80

2. Local Repo Installation for WSL

2.1. Install local repository on file system:

$ wget https://developer.download.nvidia.com/compute/cuda/repos/wsl-ubuntu/x86_64/cuda-wsl-ubuntu.pin

$ sudo mv cuda-wsl-ubuntu.pin /etc/apt/preferences.d/cuda-repository-pin-600

$ wget https://developer.download.nvidia.com/compute/cuda/11.7.1/local_installers/cuda-repo-wsl-ubuntu-11-7-local_11.7.1-1_amd64.deb

$ sudo dpkg -i cuda-repo-wsl-ubuntu-11-7-local_11.7.1-1_amd64.deb

2.2. Enroll ephemeral public GPG key:

$ sudo cp /var/cuda-repo-wsl-ubuntu-11-7-local/cuda-96193861-keyring.gpg /usr/share/keyrings/
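Steps 2.1 and 2.2 can be collected into one function with the release number as a parameter. This is a sketch under an assumption that does not come from the NVIDIA guide: that other releases use the same file-name pattern as 11.7.1-1, so the download page should be checked before using it for another version. The keyring file name (cuda-96193861-keyring.gpg for this release) differs between releases, so the copy uses a glob. All function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: steps 2.1 and 2.2 with the version pulled into parameters.
# ASSUMPTION: other releases follow the same naming pattern as 11.7.1-1.

# e.g. cuda_wsl_local_deb 11.7.1 1 -> cuda-repo-wsl-ubuntu-11-7-local_11.7.1-1_amd64.deb
cuda_wsl_local_deb() {
  local full="$1" rev="$2"
  local major="${full%%.*}"     # 11
  local rest="${full#*.}"       # 7.1
  local minor="${rest%%.*}"     # 7
  printf 'cuda-repo-wsl-ubuntu-%s-%s-local_%s-%s_amd64.deb\n' "$major" "$minor" "$full" "$rev"
}

cuda_wsl_local_url() {
  printf 'https://developer.download.nvidia.com/compute/cuda/%s/local_installers/%s\n' \
    "$1" "$(cuda_wsl_local_deb "$1" "$2")"
}

install_cuda_wsl_local() {
  local full="$1" rev="$2" deb major minor
  deb=$(cuda_wsl_local_deb "$full" "$rev")
  major="${full%%.*}"; minor="${full#*.}"; minor="${minor%%.*}"
  wget https://developer.download.nvidia.com/compute/cuda/repos/wsl-ubuntu/x86_64/cuda-wsl-ubuntu.pin || return 1
  sudo mv cuda-wsl-ubuntu.pin /etc/apt/preferences.d/cuda-repository-pin-600 || return 1
  wget "$(cuda_wsl_local_url "$full" "$rev")" || return 1
  sudo dpkg -i "$deb" || return 1
  # The keyring name changes per release, hence the glob.
  sudo cp /var/cuda-repo-wsl-ubuntu-"${major}"-"${minor}"-local/cuda-*-keyring.gpg /usr/share/keyrings/
}
```

For the release used in this post, the call would be `install_cuda_wsl_local 11.7.1 1`.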

3. Network Repo Installation for WSL

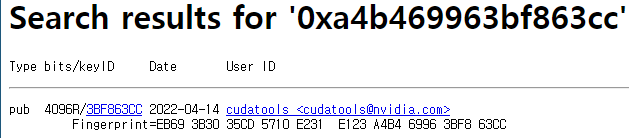

The new GPG public key for the CUDA repository (Debian-based distros) is 3bf863cc.

(https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64/3bf863cc.pub)

This key must be enrolled on the system, either using the cuda-keyring package or manually; the apt-key command is deprecated and not recommended.

3.1. Install the new cuda-keyring package:

$ wget https://developer.download.nvidia.com/compute/cuda/repos/wsl-ubuntu/x86_64/cuda-keyring_1.0-1_all.deb

$ sudo dpkg -i cuda-keyring_1.0-1_all.deb
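For the manual route mentioned above, one common approach is to download the public key from the 3bf863cc URL, convert it with `gpg --dearmor` into a binary keyring, and reference that keyring from an apt source entry via `signed-by`. The sketch assumes the keyring path /usr/share/keyrings/cuda-archive-keyring.gpg (the path the cuda-keyring package also uses, so pick one method, not both) and the flat layout of the wsl-ubuntu/x86_64 repository; the helper names and the .list file name are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: manual alternative to the cuda-keyring package (use one or the other).
# The key URL is the one given above; the keyring path and .list name are assumptions.
CUDA_KEY_URL="https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64/3bf863cc.pub"
CUDA_KEYRING="/usr/share/keyrings/cuda-archive-keyring.gpg"

# Build the apt source line; a flat repository is addressed with a trailing " /".
cuda_repo_source_line() {
  local distro="$1" arch="$2" keyring="$3"
  printf 'deb [signed-by=%s] https://developer.download.nvidia.com/compute/cuda/repos/%s/%s/ /\n' \
    "$keyring" "$distro" "$arch"
}

enroll_cuda_key_manually() {
  local tmp
  tmp=$(mktemp) || return 1
  # Download the ASCII-armored key, then store it as a binary keyring.
  wget -qO "$tmp" "$CUDA_KEY_URL" || { rm -f "$tmp"; return 1; }
  gpg --dearmor < "$tmp" | sudo tee "$CUDA_KEYRING" >/dev/null
  rm -f "$tmp"
  # Point apt at the repository, trusting only this keyring for it.
  cuda_repo_source_line wsl-ubuntu x86_64 "$CUDA_KEYRING" \
    | sudo tee /etc/apt/sources.list.d/cuda-wsl-ubuntu-x86_64.list >/dev/null
}
```

After `enroll_cuda_key_manually`, the next `sudo apt-get update` should fetch the repository index without signature errors.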

4. Common Installation Instructions for WSL

These instructions apply to both local and network installation for WSL.

4.1. Update the Apt repository cache:

$ sudo apt-get update

4.2. Install CUDA SDK:

$ sudo apt-get -y install cuda    # not recommended under WSL 2; see the warning below

$ sudo apt-get -y install cuda-toolkit-11-7

The installation instructions for the CUDA Toolkit can be found on the CUDA Toolkit download page for each installer.

But DO NOT choose the "cuda", "cuda-11-x" (e.g. "cuda-11-7", "cuda-11-8"), or "cuda-drivers" meta-packages under WSL 2, as these packages will result in an attempt to install the Linux NVIDIA driver under WSL 2.

Install the cuda-toolkit-11-x metapackage only (here, cuda-toolkit-11-7).
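A small guard can make that rule harder to break by accident. The sketch refuses the package names from the warning (cuda, cuda-11-x, cuda-drivers) plus Ubuntu's `nvidia-driver-*` packages before handing anything to apt-get; the pattern list and function names are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: refuse meta-packages that would try to install the Linux NVIDIA driver under WSL 2.

wsl_pkg_allowed() {
  case "$1" in
    cuda|cuda-[0-9]*-[0-9]*|cuda-drivers|cuda-drivers-*|nvidia-driver-*) return 1 ;;
    *) return 0 ;;
  esac
}

wsl_safe_install() {
  local p
  for p in "$@"; do
    if ! wsl_pkg_allowed "$p"; then
      echo "refusing '$p': it would pull in the Linux NVIDIA driver under WSL 2" >&2
      return 1
    fi
  done
  sudo apt-get -y install "$@"
}
```

With this in place, `wsl_safe_install cuda-toolkit-11-7` proceeds, while `wsl_safe_install cuda` stops before apt-get is called.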

4.3. Perform the post-installation actions.

The post-installation actions must be manually performed.

These actions are split into mandatory, recommended, and optional sections.

5. Post-installation Actions

5.1. Mandatory Actions

Some actions must be taken after the installation before the CUDA Toolkit and Driver can be used.

5.1.1. Environment Setup

The PATH variable needs to include /usr/local/cuda-11.7/bin.

Nsight Compute has moved to /opt/nvidia/nsight-compute/ only in the rpm/deb installation methods.

When using the .run installer, it is still located under /usr/local/cuda-11.7/.

To add this path to the PATH variable:

$ export PATH=/usr/local/cuda-11.7/bin${PATH:+:${PATH}}

In addition, when using the runfile installation method, the LD_LIBRARY_PATH variable needs to contain

- /usr/local/cuda-11.7/lib64 on a 64-bit system, or

- /usr/local/cuda-11.7/lib on a 32-bit system
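The `${VAR:+:${VAR}}` suffix used in these exports expands to a colon followed by the old value only when the variable is already set and non-empty, so no empty entry is created (in PATH, an empty entry means the current directory). The sketch below isolates that idiom and adds a hypothetical `append_once` helper for persisting the exports in ~/.bashrc, which assumes bash is the login shell.

```shell
#!/usr/bin/env bash
# Sketch: the ${VAR:+:${VAR}} idiom, plus a helper to persist exports without duplicates.

# Prepend DIR to a colon-separated list, adding ":" only if the list is non-empty.
prepend_dir() {
  local dir="$1" cur="$2"
  printf '%s\n' "${dir}${cur:+:${cur}}"
}

# Append LINE to FILE only if that exact line is not already present.
append_once() {
  local line="$1" file="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

For example, `append_once 'export PATH=/usr/local/cuda-11.7/bin${PATH:+:${PATH}}' ~/.bashrc`; the single quotes keep the expansion literal so it is evaluated each time a new shell starts.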

To change the environment variables for 64-bit operating systems:

$ export LD_LIBRARY_PATH=/usr/local/cuda-11.7/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

5.1.2. POWER9 Setup

Because of the addition of new features specific to the NVIDIA POWER9 CUDA driver, there are some additional setup requirements in order for the driver to function properly.

These additional steps are not handled by the installation of CUDA packages, and failure to ensure these extra requirements are met will result in a non-functional CUDA driver installation.

There are two changes that need to be made manually after installing the NVIDIA CUDA driver to ensure proper operation:

1. The NVIDIA Persistence Daemon should be automatically started for POWER9 installations.

Check that it is running with the following command:

$ sudo systemctl status nvidia-persistenced

2. Disable a udev rule installed by default in some Linux distributions that causes hot-pluggable memory to be automatically onlined when it is physically probed.

This behavior prevents NVIDIA software from bringing NVIDIA device memory online with non-default settings.

This udev rule must be disabled in order for the NVIDIA CUDA driver to function properly on POWER9 systems.
